The 3 parts of an SVM

The SVM consists of 3 "tricks" combined to give one of the most influential and arguably state-of-the-art machine learning algorithm. The 3rd, "kernel trick" is what gives SVM its power.During the talk I will refer to Cortes and Vepnik's 1995 paper.

- A linear classification problem, ie, separating a set of data points by a straight line / plane. This is very simple.

- Transforming the primal optimization problem to its dual via Lagrangian multipliers. This is a standard trick that does not result in any gain / loss in problem complexity. But the dual form has the advantage of depending only on the inner product of input values.

- The kernel trick that transforms the input space to a high dimensional feature space. The transform function can be non-linear and highly complex, but it is defined implicitly by the inner product.

1. The maximum-margin classifier

The margin is the separation between these 2 hyper-planes:

$ \bar{w}^* \cdot \bar{x} = b^* + k $

$ \bar{w}^* \cdot \bar{x} = b^* - k $

so the maximum margin is given by:

$ m^* = \frac{2k}{|| \bar{w}^* ||} $

The optimization problem is to maximize $m^*$, which is equivalent to minimizing:

$$ \max (m^*) \Rightarrow \max_{\bar{w}} (\frac{2k}{ || \bar{w} || })

\Rightarrow \min_{\bar{w}} (\frac{ || \bar{w} || }{2k}) \Rightarrow \min_{\bar{w}} ( \frac{1}{2} \bar{w} \cdot \bar{w} ) $$subject to the constraints that the the hyper-plane separates the + and - data points:

$$ \bar{w} \cdot (y_i \bar{x_i} ) \ge 1 + y_i b $$

This is a convex optimization problem because the objective function has a nice quadratic form $\bar{w} \cdot \bar{w} = \sum \bar{w_i}^2$ which has a convex shape:

2. The Lagrangian dual

The Lagrangian dual of the maximum-margin classifier is to maximize:$$ \max_{\bar{\alpha}} ( \sum_{i=1}^{l} \alpha_i - \frac{1}{2} \sum_{i=1}^{l} \sum_{j=1}^{l} \alpha_i \alpha_j y_i y_j \bar{x_i} \cdot \bar{x_j} ) $$subject to the constraints:

$$ \sum_{i=1}^l \alpha_i y_i = 0, \quad \alpha_i \ge 0 $$

Lagrangian multipliers is a standard technique so I'll not explain it here.

Question: why does the inner product appear in the dual? But the important point is that the dual form is dependent only on this inner product $(\bar{x_i} \cdot \bar{x_j})$, whereas the primal form is dependent on $(\bar{w} \cdot \bar{w})$. This allows us to apply the kernel trick to the dual form.

3. The kernel trick

A kernel is just a symmetric inner product defined as:$$ k(\mathbf{x}, \mathbf{y}) = \Phi(\mathbf{x}) \cdot \Phi(\mathbf{y}) $$By letting $\Phi$ free we can define the kernel as we like, for example, $ k(\mathbf{x}, \mathbf{y}) = \Phi(\mathbf{x}) \cdot \Phi(\mathbf{y}) = (\mathbf{x} \cdot \mathbf{y} )^2 $.

In the Lagrangian dual, the dot product $(\bar{x_i} \cdot \bar{x_j})$ appears, which can be replaced by the kernel $k(\bar{x_i}, \bar{x_j}) = (\Phi(\bar{x_i}) \cdot \Phi(\bar{x_j}))$.

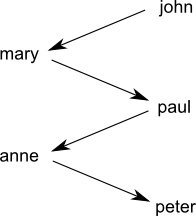

Doing so has the effect of transforming the input space $\mathbf{x}$ into a feature space $\Phi(\mathbf{x})$, as shown in the diagram:

But notice that $\Phi$ is not explicitly defined; it is defined via the kernel, as an inner product. In fact, $\Phi$ is not uniquely determined by a kernel function.

This is a possible transformation function $\Phi$ for our example:

$$ \Phi(\mathbf{x}) = (x_1^2, x_2^2, \sqrt{2} x_1 x_2) $$It just makes $ \Phi(\mathbf{x}) \cdot \Phi(\mathbf{y}) $ equal to $ (\mathbf{x} \cdot \mathbf{y} )^2 $.

Recall that the inner product induces a norm via:

$ ||\mathbf{x}|| = \sqrt{\mathbf{x} \cdot \mathbf{x}} $

and the distance between 2 points x and y can be expressed as:

$ d(\mathbf{x},\mathbf{y})^2 = || \mathbf{x - y} ||^2 = \mathbf{x} \cdot \mathbf{x} + \mathbf{y} \cdot \mathbf{y} - 2 \mathbf{x} \cdot \mathbf{y}$

Thus, defining the inner product is akin to re-defining the distances between data points, effectively "warping" the input space into a feature space with a different geometry.Some questions: What determines the dimension of the feature space? How is the decision surface determined by the parameters $\alpha_i$?

Hilbert Space

A Hilbert space is just a vector space endowed with an inner product or dot product, notation $\langle \mathbf{x},\mathbf{y}\rangle$ or $(\mathbf{x} \cdot \mathbf{y})$.The Hilbert-Schmidt theorem (used in equation (35) in the Cortes-Vapnik paper) says that every self-adjoint operator in a Hilbert space H (which can be infinite-dimensional) forms an orthogonal basis of H. In other words, every vector in space H has a unique representation of the form:

$$ \mathbf{x} = \sum_{i=1}^\infty \alpha_i \mathbf{u}_i $$This is called a spectral decomposition.

* * *

As a digression, Hilbert spaces can be used to define function spaces. For example, this is the graph of a function with an integer domain:

with values of $f(1), f(2), f(3), ... $ on to infinity. So, each graph corresponds to one function.

Imagine an infinite dimensional space. Each point in such a space has dimensions or coordinates $(x_1, x_2, ... )$ up to infinity. So we can say that each point defines a function via

$$f(1) = x_1, \quad f(2) = x_2, \quad f(3) = x_3, ... $$

In other words, each point corresponds to one function. That is the idea of a function space. We can generalize from the integer domain ($\aleph_0$) to the continuum ($2^{\aleph_0}$), thus getting the function space of continuous functions.

End of digression :)